AI Legal Tools Caught Hallucinating Again!

You may be shocked to hear that the AI legal research tools offered by LexisNexis and Thomson Reuters (Westlaw) hallucinate between 17% and 33% of the time. These shocking new stats come from a study recently conducted by researchers at Stanford University. Given the number of AI research tools on the market, such figures might not be astonishing in general, but these are the figures for two of the most established legal software companies.

The controversial part of this study is that these companies, specifically Casetext, Thomson Reuters, and LexisNexis, market their LegalTech tools as "hallucination-free" and as "reducing hallucinations to nearly zero." I mean, who is going to check them on that or hold them accountable? Take a look at some of their public statements below.

It goes without saying that as an attorney or legal professional, you must always supervise and verify AI outputs. But these stats matter because they show legal professionals how unreliable their assistive tools still are, and how far we remain from near-perfect legal tools, let alone tools that could replace lawyers.

One of the researchers wrote in a LinkedIn post that Thomson Reuters criticized the study when it was released. The company claimed that the evaluated tool, Ask Practical Law AI, was not intended for general legal research, and that the research team should have evaluated Westlaw Precision, its dedicated AI legal research tool. Yet when the researchers had asked for access to that tool, Thomson Reuters refused and told them to use Ask Practical Law AI, only agreeing to give access to Westlaw Precision days before the research preprint was released. I think they were caught lying and will say anything to get out of this mess. Regardless of which tool was evaluated, hallucinating between 17% and 33% of the time while marketing otherwise is nasty work. On top of that, Thomson Reuters' tool hallucinates nearly twice as often as the other legal AI tools that were tested.

LexisNexis's description of "hallucination-free":

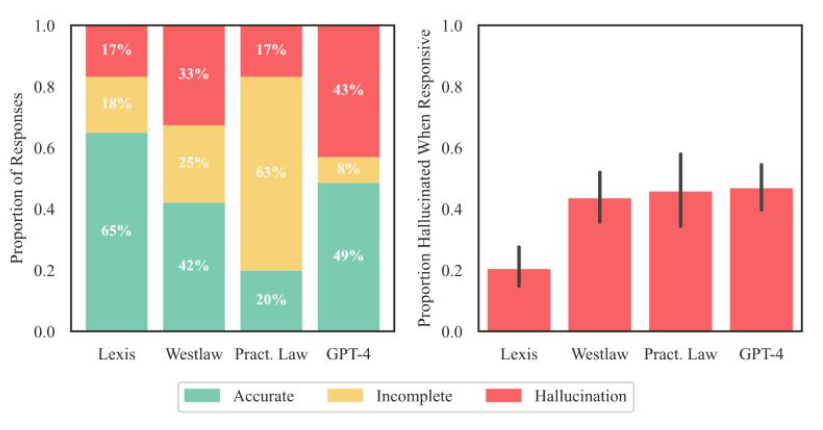

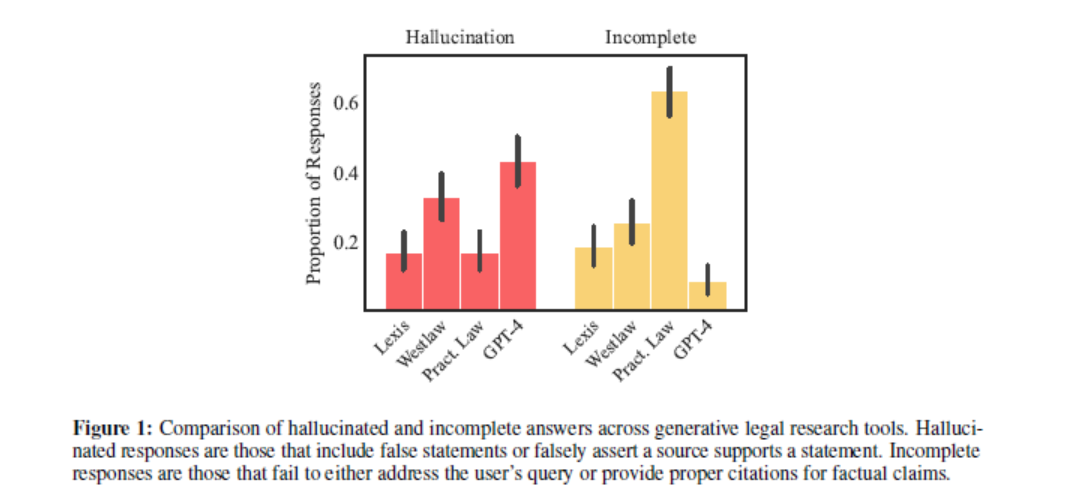

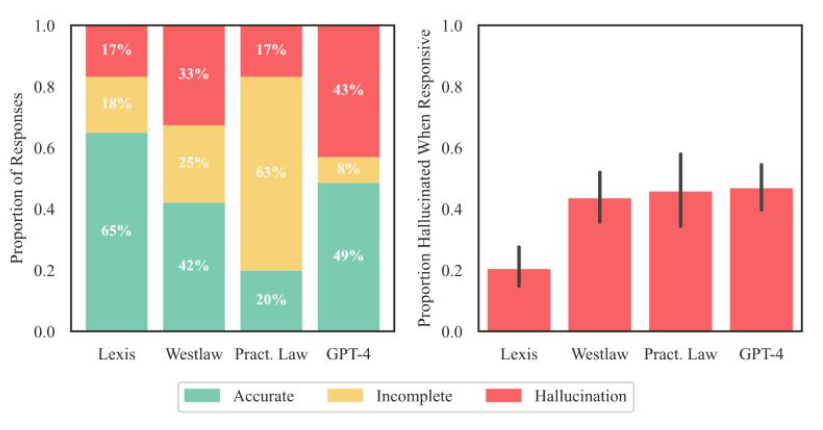

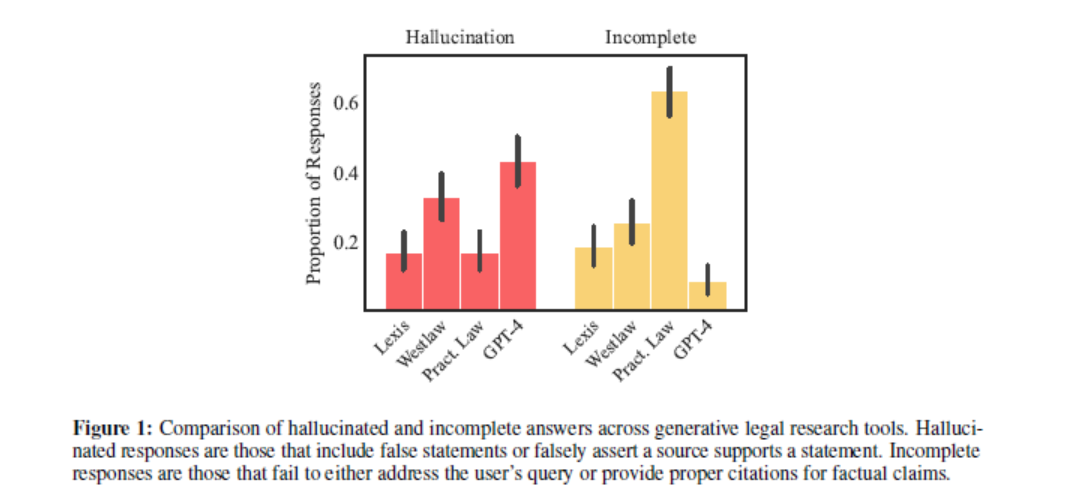

The Stanford researchers were able to test LexisNexis's AI legal research tool because the university had access to it. In testing, it hallucinated 17% of the time: far better than Westlaw at 33% and GPT-4 at 43%, but a far cry from the marketed 0%. Its accuracy rate was 65% (the study counted incomplete answers separately, so the two figures don't add up to 100%). That is OK at best. It shouldn't deter firms from using these applications; it just means legal professionals must exercise extreme caution with AI output. LexisNexis's response is that every citation in its tool's output that comes with a link is 100% accurate. Citations without a link don't count as hallucinations under its definition; you're simply supposed to be wary of them. LexisNexis also claims that it runs its own internal tests, is constantly improving its software, and gets results significantly better than the 17% found by the Stanford team. Notice that they didn't say 0% hallucinations? Maybe we should agree on a standard definition of hallucination and have independent bodies test AI legal tools. Such testing would give a near-objective measure of which tools are the best on the market.

Are these tools any better than ChatGPT?

When these tools were introduced to the market, the claim was that these legal tech companies had solved hallucinations, the major criticism standing in the way of both ChatGPT and mass LegalTech adoption. These tools were touted as ChatGPT built for lawyers, without the risks that ChatGPT carries. But touting a tool that doesn't perform significantly better than GPT-4 is nasty work (see the results below).

Who is funding bad software?

I'm curious whether the funds investing millions in these companies run any tests or see this kind of data. Maybe they do see the data but are banking on lawyers not asking questions, on questionable marketing practices, on addressing lawyers' fears by lying, and on pointing out GPT-4's flaws. Or maybe these funds are just looking for a big payout: the lawtech industry is predicted to reach a $50 billion valuation by 2027. After all, it's the lawyer who used the software who will be held accountable, not the software company or its investors. The lawtech companies and their investors are just riding the lawtech hype train.

What's the big takeaway?

The researchers want you to know that this paper is not an exposé; no AI product is 100% accurate. Rather, it is a call for transparency: for legal AI companies to back up their claims with empirical evidence and to allow independent evaluations of their products. I'll add a call for accurate marketing. Gambling and retail investing companies will tell you upfront that 79% of their depositors lose their money. But somehow lawtech companies can get away with saying "Our tools are not a substitute for legal advice" and having that absolve them of any responsibility.